TL;DR

Security teams lack visibility into how AI agents truly behave in production. Miggo Security’s AI Runtime Observability maps agents, models, and tools as they execute, giving you the runtime context needed to enforce safety and prevent drift. This blog covers the who, what, where, and how.

The New Reality (and Risks) of AI in Production

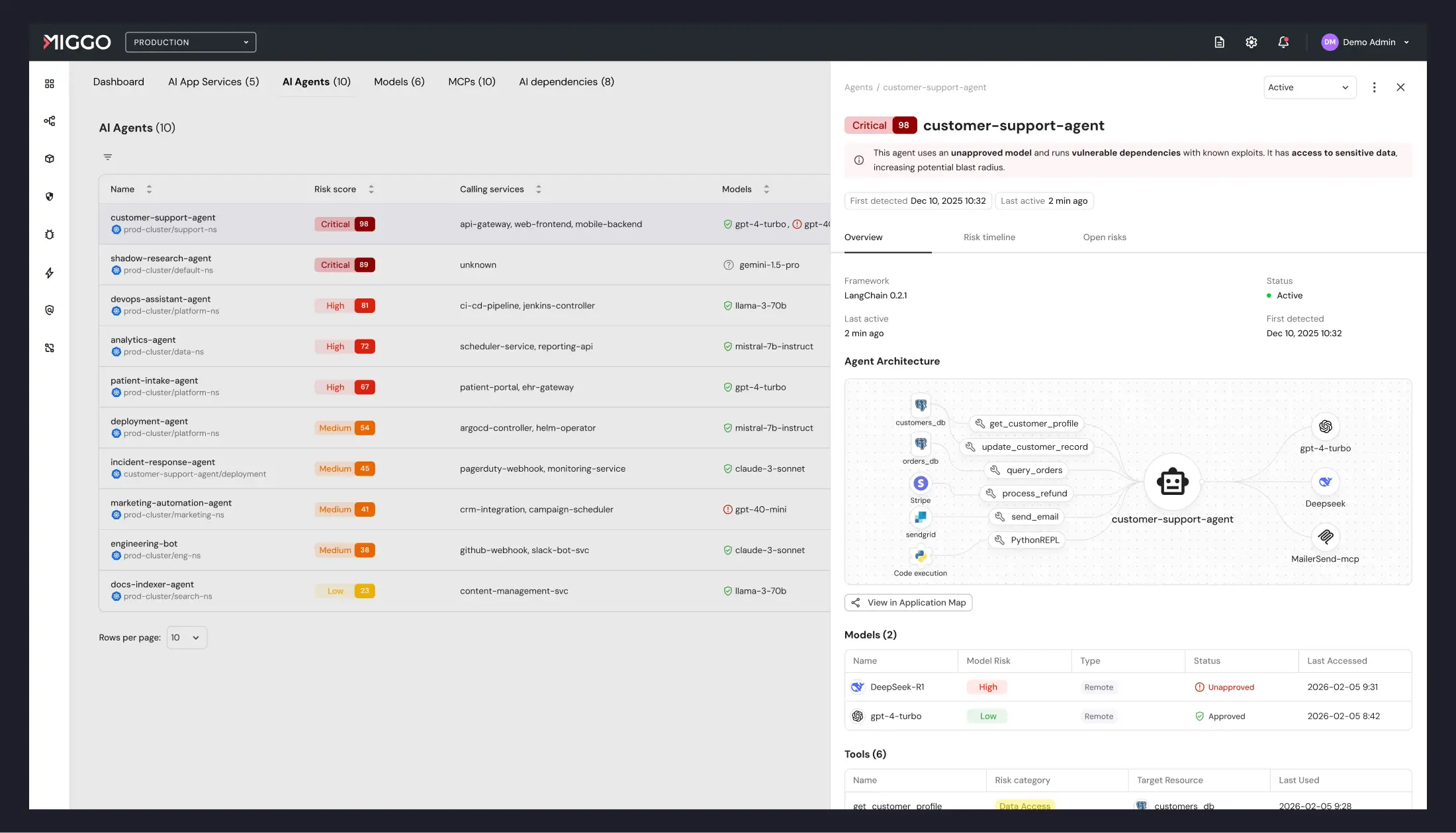

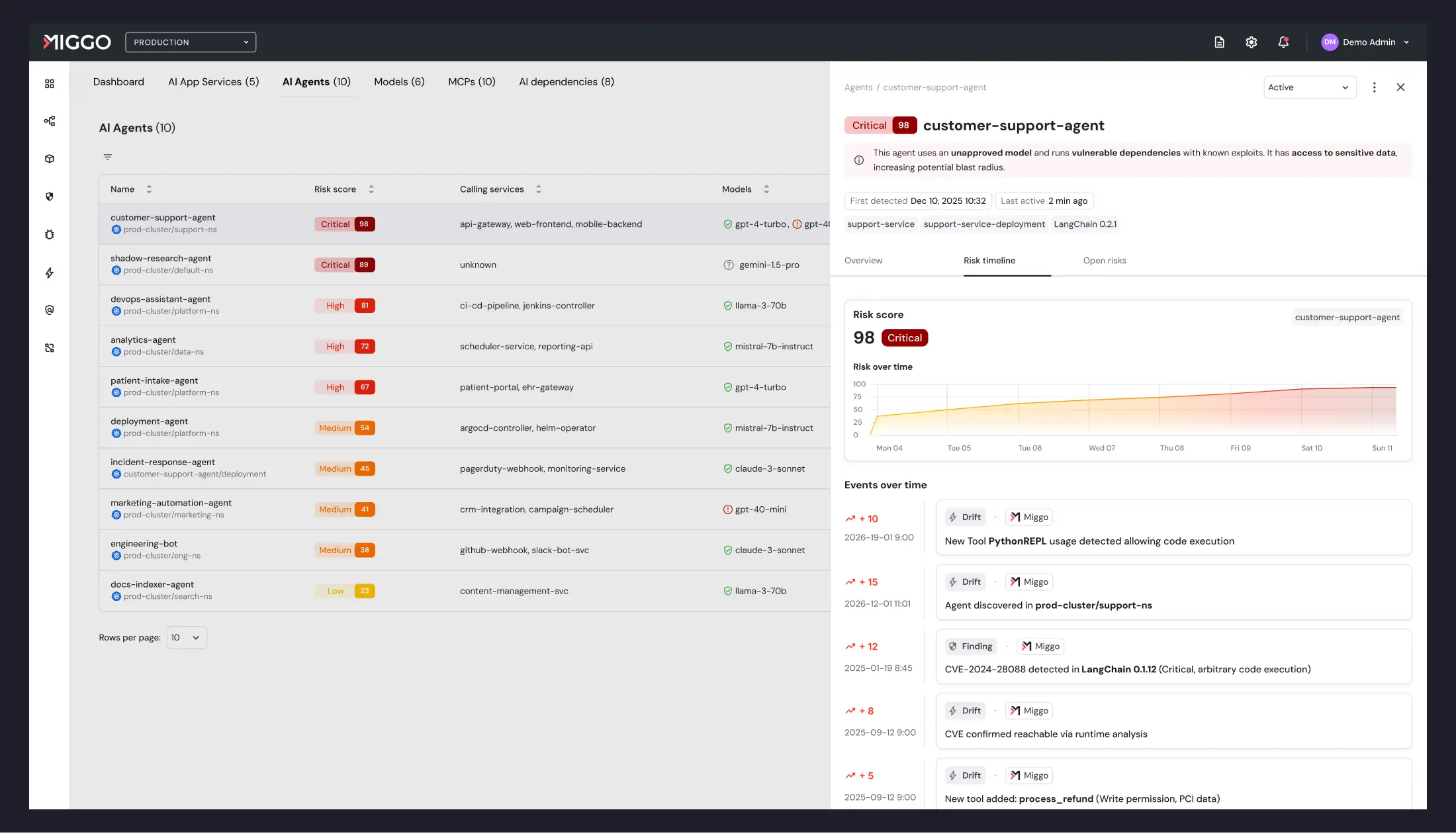

Three weeks ago, a customer support agent was deployed with GPT-4, read-only database access, and three approved tools. Today, it’s running DeepSeek-R1, has write access to two additional databases, and invokes seven tools—including a Python interpreter. The organization’s risk profile changed fundamentally. But no alert was fired and no review happened. In fact, no one noticed.

Unlike traditional software, where dependencies can be mapped from code before deployment, AI and agentic applications assemble their attack surface dynamically. Developers swap models to optimize costs. Tools get added to solve immediate problems. MCP integrations expand capabilities on the fly.

The risk isn't malicious; it is architectural. Because the design of AI applications and the interaction between components are non-deterministic, the risks are obscured by the very flexibility that makes these tools powerful. None of these runtime changes go through a standard security review, creating a massive blind spot.

The Visibility Problem

Traditional security maps the attack surface at deployment: scan the code, review the permissions, and ship to production. AI and agentic applications break that model completely.

- The agent decides at runtime which tool to invoke.

- The model gets swapped without changing the codebase.

- Last Tuesday's execution flow differs from today's.

The team cannot write a policy for behavior that didn't exist when the app was deployed, nor can they audit access to data sources connected after the fact.

Presenting Runtime Truth

Policies written at deployment time can’t cover behavior that emerges later, so teams need visibility while the application is running. Security teams can’t protect what they can’t see, and today most organizations are effectively blind to their agentic attack surface.

Runtime truth is the only defense capable of addressing AI and agentic risks as they emerge in production.

That’s why we’ve extended Miggo’s patent-pending DeepTracing™ technology, already the most comprehensive approach to runtime truth, to include agentic execution profiling, and combined it with RuntimeDNA, Miggo’s live representation of application logic and execution paths.

Together, DeepTracing™ and RuntimeDNA create a continuously updated, evidence-based view of how AI applications and agents actually behave in production: how agents execute, which models and tools they invoke, and what data they can reach.

With this runtime truth embedded at the heart of AI-BOM & Agentic Guardrails, Miggo enables teams to observe, understand, and govern AI systems based on real behavior – not assumptions made at deployment time.

Deep Dive into Miggo Security’s AI Runtime Observability

Who

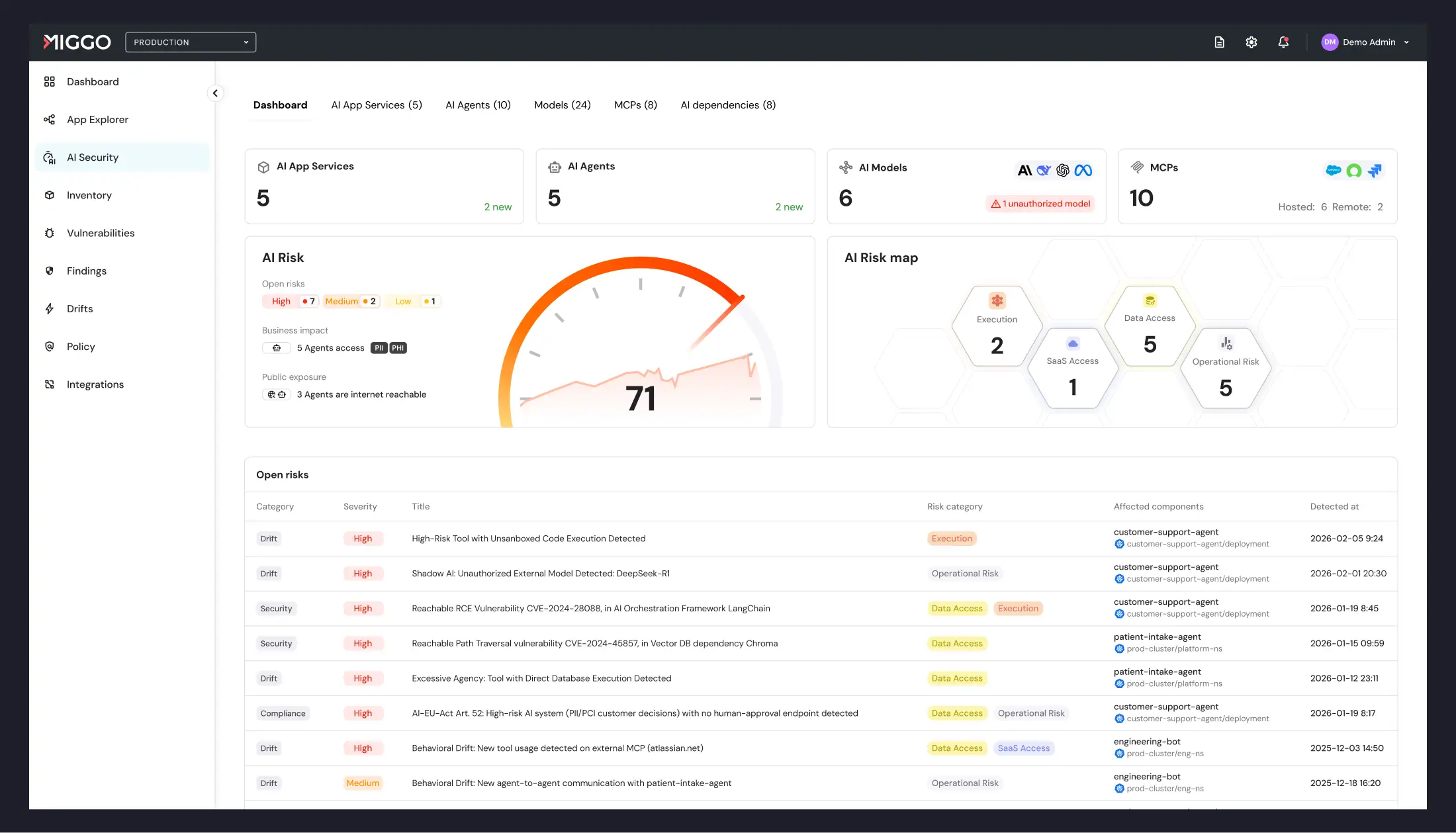

Expose AI component sprawl across the environment with Miggo's AI-BOM.

- Automated AI Discovery — Continuously map your agentic environment from runtime execution: agents, models, MCP integrations, tools, frameworks, and data sources.
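To make "discovery from runtime execution" concrete, here is a minimal sketch that folds a stream of observed events into a per-agent inventory. The event shape (`agent`, `kind`, `name`) and every component name are hypothetical, chosen only for this example. This is an illustration of the idea, not Miggo's data model or API.

```python
from collections import defaultdict

# Hypothetical runtime events: each records one component an agent was seen using.
observed_events = [
    {"agent": "support-agent", "kind": "model", "name": "gpt-4"},
    {"agent": "support-agent", "kind": "tool", "name": "search_kb"},
    {"agent": "support-agent", "kind": "data_source", "name": "support_db"},
    {"agent": "support-agent", "kind": "model", "name": "deepseek-r1"},
    {"agent": "support-agent", "kind": "tool", "name": "python_interpreter"},
    {"agent": "billing-agent", "kind": "model", "name": "gpt-4"},
]


def build_inventory(events):
    """Group observed components by agent, then by component kind."""
    inventory = defaultdict(lambda: defaultdict(set))
    for event in events:
        inventory[event["agent"]][event["kind"]].add(event["name"])
    return inventory


inventory = build_inventory(observed_events)

for agent, components in sorted(inventory.items()):
    for kind, names in sorted(components.items()):
        print(f"{agent} | {kind}: {', '.join(sorted(names))}")
```

Because the inventory is built from what actually ran, a model swap shows up as a second entry under `model` even though nothing in the codebase changed.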

What

See what the agents are actually doing.

- Runtime Security Findings — Detect exploitable vulnerabilities with real context: active CVEs, reachable execution paths, unapproved models with data access, and dangerous tool usage.

- AI Reasoning MAP — Contextual mapping of AI execution flow, from initiation, through iterative reasoning steps and model inference, to tool execution.

- Compliance Monitoring — Enforce internal governance and external regulations like the EU AI Act with runtime evidence.

Where

Map each agent's blast radius and the assets (tools, data, MCPs) it can reach.

- Risk Scoring by Blast Radius — Prioritize risk based on data access, system impact, and internet reachability.
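One way to picture blast-radius prioritization is a simple additive score over the three factors named above. The sketch below is illustrative only: the field encodings, the weights, and the agent names are assumptions made for this example, not Miggo's scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentExposure:
    name: str
    data_access: int          # assumed scale: 0 = none, 1 = read, 2 = write
    system_impact: int        # assumed scale: 0 = low, 1 = medium, 2 = high
    internet_reachable: bool


def blast_radius_score(agent: AgentExposure) -> int:
    """Additive score with arbitrary, illustrative weights."""
    score = 3 * agent.data_access + 2 * agent.system_impact
    if agent.internet_reachable:
        score += 4
    return score


# Hypothetical agents used only to show the ranking.
agents = [
    AgentExposure("internal-summarizer", data_access=1, system_impact=0, internet_reachable=False),
    AgentExposure("support-agent", data_access=2, system_impact=1, internet_reachable=True),
    AgentExposure("report-builder", data_access=1, system_impact=2, internet_reachable=False),
]

# Highest score first: these are the agents to review first.
ranked = sorted(agents, key=blast_radius_score, reverse=True)
for agent in ranked:
    print(f"{agent.name}: {blast_radius_score(agent)}")
```

A real scoring model would likely weigh data sensitivity and reachable paths in more detail. The point here is only that ranking by reach, rather than by raw finding count, changes which agent gets attention first.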

How

Track and get alerted on behavior changes.

- Behavioral Drift Detection — Track changes in models, tools, and data access over time. Review, approve, or reject drift before it becomes risk.
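Conceptually, drift detection is a diff between an approved baseline and what is observed now. The sketch below replays the customer support agent from the opening example: GPT-4 with read-only access and three tools at deployment, then DeepSeek-R1 with write access to two more databases and seven tools. The `AgentSnapshot` type, the tool names, and the database names are hypothetical, and the logic is a simplified illustration rather than Miggo's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSnapshot:
    model: str
    tools: frozenset
    data_access: frozenset  # set of (resource, permission) pairs


def detect_drift(baseline: AgentSnapshot, current: AgentSnapshot) -> list:
    """Return human-readable differences between two snapshots."""
    findings = []
    if baseline.model != current.model:
        findings.append(f"model changed: {baseline.model} -> {current.model}")
    for tool in sorted(current.tools - baseline.tools):
        findings.append(f"tool added: {tool}")
    for tool in sorted(baseline.tools - current.tools):
        findings.append(f"tool removed: {tool}")
    for resource, permission in sorted(current.data_access - baseline.data_access):
        findings.append(f"access added: {permission} on {resource}")
    for resource, permission in sorted(baseline.data_access - current.data_access):
        findings.append(f"access removed: {permission} on {resource}")
    return findings


# Approved state at deployment (tool and database names are made up).
baseline = AgentSnapshot(
    model="gpt-4",
    tools=frozenset({"search_kb", "lookup_order", "send_email"}),
    data_access=frozenset({("support_db", "read")}),
)

# State observed three weeks later.
current = AgentSnapshot(
    model="deepseek-r1",
    tools=baseline.tools | {"python_interpreter", "issue_refund", "update_ticket", "fetch_url"},
    data_access=baseline.data_access | {("orders_db", "write"), ("billing_db", "write")},
)

findings = detect_drift(baseline, current)
for finding in findings:
    print(finding)
```

Each finding is a candidate for the review, approve, or reject step: an approved change updates the baseline, and a rejected one becomes an alert.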

What’s Ahead

This release is just the beginning. Miggo’s AI Security roadmap extends beyond Runtime Observability to include active detection and prevention of AI-native attacks. Stay tuned for upcoming features that address prompt injection, malicious tool invocation, poisoned embeddings, and compromised models.

Ready to gain visibility into and control over your AI and agentic security? Contact us today to learn more.
